Executive summary

On Friday 17/08/2018 a security issue affecting some of our websites was brought to our attention. While the issue was being fixed manually, three websites became unavailable for 17 minutes, and one website lost its images and appeared without any photographs for 12 hours. The following is a detailed report of what happened, what went wrong and the steps we are taking to ensure that this does not happen again.

Detailed report

On Friday 17/08/2018, while creating the Colombia and Africa sites, we accidentally committed to GitHub the secret service account keys for the Google Cloud Storage buckets of those websites. Google notified us a few minutes later. We had relied on a .gitignore rule to exclude those files and did not notice them when making the commit.
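A .gitignore rule only keeps untracked files out of `git add`. It does not apply to files that are force-added or already tracked, and it matches file names rather than contents. A check on the content that is actually staged avoids both gaps. The Python below is a hypothetical pre-commit check, not something we currently run. It assumes the leaked files are Google Cloud service account keys in JSON form, with a `"type": "service_account"` field and a PEM `private_key`. The function names are illustrative.

```python
import json
import re
import subprocess
import sys
from typing import List

# The PEM header that appears inside the "private_key" field of a key file.
PEM_PRIVATE_KEY = re.compile(r"-----BEGIN (?:RSA )?PRIVATE KEY-----")


def looks_like_service_account_key(text: str) -> bool:
    """True if `text` parses as a GCP service account key or contains a PEM private key."""
    try:
        data = json.loads(text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        data = None
    if isinstance(data, dict) and data.get("type") == "service_account" and "private_key" in data:
        return True
    return bool(PEM_PRIVATE_KEY.search(text))


def staged_files() -> List[str]:
    """Paths added, copied or modified in the index (NUL-separated to survive odd names)."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM", "-z"],
        check=True, capture_output=True, text=True,
    ).stdout
    return [path for path in out.split("\0") if path]


def staged_content(path: str) -> str:
    """The staged (index) version of `path`, which is what will actually be committed."""
    blob = subprocess.run(["git", "show", f":{path}"], check=True, capture_output=True).stdout
    return blob.decode("utf-8", errors="ignore")


def main() -> int:
    """Return 1 (which aborts the commit when used as a hook) if any staged file looks like a key."""
    offenders = [p for p in staged_files() if looks_like_service_account_key(staged_content(p))]
    for path in offenders:
        print(f"pre-commit: refusing to commit {path}: it looks like a private key", file=sys.stderr)
    return 1 if offenders else 0
```

To use this as a hook, save the file as `.git/hooks/pre-commit`, make it executable and end it with `sys.exit(main())`. Git aborts the commit when that hook exits non-zero. The check is a heuristic, so an intentional test fixture can still be committed with `git commit --no-verify`.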

Investigation showed that the same commit also contained the secret key files of the GP India, GP Brasil and GP Netherlands websites. The risk was that anyone using those keys could upload any file they wanted to our Google Cloud Storage (where we store the planet4 images for each site) and then serve it from our servers.

Until then, whenever a new site was created, the developer/sysadmin doing the rollout had to create the keys manually, store them on their local drive, and run a script that transferred those keys to the CircleCI server.

We decided to issue new security keys for the affected websites. This had to be done manually, since we had never needed an automated process for replacing keys. We created the new keys, uploaded them to CircleCI and ran only the “deploy” part of our two-part release process (normally “build” followed by “deploy”). Several things went wrong during this process:

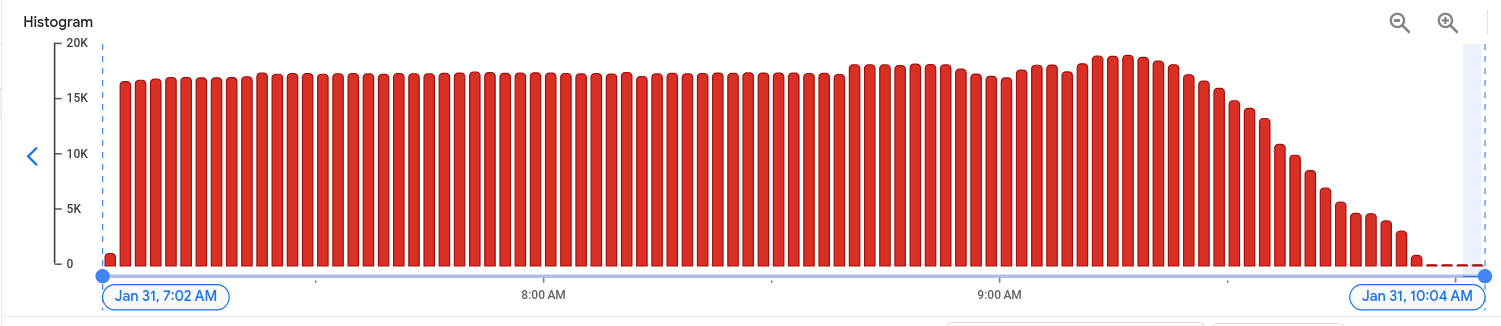

The “deploy” step, run via Helm, recreated the Kubernetes secrets but did not restart the pods (services) that relied on them. Most importantly, one of those services was the CloudSQL proxy, which provides secure access to our database. As a result, the websites returned an “Error establishing a database connection” error.
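Replacing a Secret is not enough on its own. Environment variables taken from a Secret are only read when a container starts, and a process that loads its key file only at startup (which we assume applies to the CloudSQL proxy here) keeps using the old key. The pods that depend on the Secret have to be replaced as well. The Python below is a sketch under those assumptions. It finds whether a Deployment's pod template references a given Secret, using the standard Deployment field paths, and builds a patch that forces a rollout by changing a pod-template annotation. The function names are illustrative.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Optional

Manifest = Dict[str, Any]


def uses_secret(deployment: Manifest, secret_name: str) -> bool:
    """True if the Deployment's pod template mounts `secret_name` or reads it into env vars."""
    pod = deployment.get("spec", {}).get("template", {}).get("spec", {})
    # Secret mounted as a volume (e.g. a key file for a proxy sidecar).
    for volume in pod.get("volumes", []):
        if volume.get("secret", {}).get("secretName") == secret_name:
            return True
    # Secret exposed through environment variables, in app or init containers.
    for container in pod.get("containers", []) + pod.get("initContainers", []):
        for source in container.get("envFrom", []):
            if source.get("secretRef", {}).get("name") == secret_name:
                return True
        for var in container.get("env", []):
            if var.get("valueFrom", {}).get("secretKeyRef", {}).get("name") == secret_name:
                return True
    return False


def restart_patch(now: Optional[datetime] = None) -> Manifest:
    """Patch that touches only a pod-template annotation.

    Any change under spec.template makes the Deployment controller roll out
    new pods, and new pods read the Secret's current contents at startup.
    """
    now = now or datetime.now(timezone.utc)
    annotations = {"kubectl.kubernetes.io/restartedAt": now.isoformat()}
    return {"spec": {"template": {"metadata": {"annotations": annotations}}}}
```

The patch could be sent with `kubectl patch deployment <name> -p '<json>'` or with the Kubernetes Python client's `AppsV1Api().patch_namespaced_deployment(name, namespace, body)`. kubectl 1.15 and later also provide `kubectl rollout restart deployment/<name>`, which sets the same annotation key.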

The error was identified and fixed for the production sites within those 17 minutes, and the websites became available again.

Note: all of this happened on Friday afternoon, after the sysadmin who controls the process had already finished his shift, so he ended up working until very late. He left me with instructions and signed off for the day (it was already very late in Australia), saying he would check again first thing in the morning and fix the staging sites and anything else that was needed.

After that, the NL team discovered that their site had no photos. Further investigation showed that, although the India and Brasil sites were still displaying images, it was no longer possible to upload new images. The cause was identified as follows:

Because the container version had not changed, the new deployment did not restart the application pods, so they never picked up the new service keys from the updated Kubernetes secrets.
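Helm's chart development tips describe a common way to make a deploy restart pods when only a Secret changes ("Automatically Roll Deployments"). The pod template carries a checksum annotation such as `checksum/config: {{ include (print $.Template.BasePath "/configmap.yaml") . | sha256sum }}`, pointed at the chart's secret template in our case. Any change to the rendered Secret then changes the pod template, and Kubernetes rolls out new pods even though the container version is the same. We have not confirmed whether our chart could adopt this as-is. The Python sketch below only illustrates the mechanism, and its function names and the `checksum/secret` key are hypothetical.

```python
import hashlib
import json
from typing import Dict


def secret_checksum(secret_data: Dict[str, str]) -> str:
    """SHA-256 of the Secret's data, serialised with sorted keys so key order is irrelevant."""
    canonical = json.dumps(secret_data, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def pod_template_annotations(secret_data: Dict[str, str]) -> Dict[str, str]:
    """Annotation for spec.template.metadata.annotations.

    If the secret changes, this value changes, so the pod template changes and a
    deploy rolls out new pods even when the container image tag is unchanged.
    """
    return {"checksum/secret": secret_checksum(secret_data)}
```

Hashing the whole data map with sorted keys keeps the annotation stable across renders, so pods are only restarted when a key actually changes.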

As for the difference between the three sites (Brasil and India still showing images while NL showed none), we suspect that an editor/admin of the NL site, trying to fix the “I cannot upload images” issue, ran the “sync images with stateless” process of the wp-stateless plugin. Under normal circumstances this task causes no problems, but in this case (with a wrong secret key) it caused WordPress to replace all image URLs with local ones instead of the stateless ones, so the images could no longer be displayed.

At that point, after confirming that the images were still in the stateless storage (i.e. they had not actually been lost), I decided not to run a “build”+“deploy” procedure as we had done for the other two sites. I was concerned that a new sync from the WordPress server (where the images do not exist) might delete the same images from the online server. I discussed this with the NL office, and we agreed that the issue was not urgent enough to justify that risk and could wait until the next morning, when Ray would be available.

Ray then fixed the issue, making sure that no images were lost, and by 03:00 Amsterdam time the images were appearing correctly.

How this could have been avoided, and steps we are taking to improve the process:

– If secrets management were automated and abstracted via a service such as HashiCorp Vault. [BACKLOG]

– If keys were created and transferred to CircleCI by the nro-generator script instead of manually, they would never need to sit on a developer's local drive, and there would be no danger of accidentally committing and compromising them. Ray has investigated this and modified the nro-generator scripts to do it automatically. [WORK IN PROGRESS]

– If we had tests that are safe to run against live sites, including one that checks that “a new image can be uploaded and is then available”. All our current functional tests are intrusive: they modify the site they are run against, so we run them against a default website but not against live websites. Our plan is to design a new suite of tests that can run against a live website without modifying it at all (although “upload an image” does modify the website, so this still needs to be discussed). [PLANNED FOR THE FUTURE]

– If more than one sysadmin knew the whole process. At the moment only one sysadmin knows everything very well, while several other people know parts of the process. The operations team is working to create redundancy by having more sysadmins learn the whole process. [WORK IN PROGRESS]

– If pre-commit hooks that detect secrets before they are committed were in place for susceptible repositories. Feasibility: low

– If developers never tried to rush code out the door late on a Friday afternoon. Feasibility: high
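The live-site test described in the list has a read-only half: checking that the images on a page actually load. That part can be done without modifying anything. The Python below is a hypothetical sketch that assumes the stateless images are served from `storage.googleapis.com`. It fetches a page, collects the `<img src>` values and reports any image that either comes from another host or does not return HTTP 200. The "other host" case is what happened on the NL site once its URLs were rewritten to local ones. The sketch ignores `srcset` and lazy-loading attributes, and the function names are illustrative.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urljoin, urlparse


class ImageCollector(HTMLParser):
    """Collects the src attribute of every <img> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def http_get(url: str) -> str:
    """Fetch a page body (GET only; nothing on the site is modified)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def http_status(url: str) -> int:
    """HEAD an image URL and return its HTTP status (0 if unreachable)."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # must come before URLError, its base class
        return err.code
    except urllib.error.URLError:
        return 0


def image_problems(
    page_url: str,
    expected_host: Optional[str] = None,
    get: Callable[[str], str] = http_get,
    status: Callable[[str], int] = http_status,
) -> List[str]:
    """List images on `page_url` that are served from the wrong host or do not load."""
    collector = ImageCollector()
    collector.feed(get(page_url))
    problems = []
    for src in collector.sources:
        url = urljoin(page_url, src)  # resolve relative paths such as /wp-content/...
        if expected_host and urlparse(url).hostname != expected_host:
            problems.append(f"wrong host: {url}")
        elif status(url) != 200:
            problems.append(f"not loading: {url}")
    return problems
```

The fetch and status functions are parameters, so the check can be exercised offline with stubs. Against a live site it would be called as `image_problems("https://<site>/", expected_host="storage.googleapis.com")` and would issue only GET and HEAD requests.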